I first started using Polars when working on an IoT project. Before that, I used Pandas for processing and deep analytics on IoT data.

But day by day, the amount of data kept growing. The budget for the data project was limited, and when I tried to request a bigger compute resource to scale Spark, it was rejected. We only had one Airflow instance with 2 vCPUs and 8GB RAM.

My Airflow jobs ran every 30 minutes, creating a near real-time pipeline for the dashboard.

Eventually, bottlenecks appeared when processing 3–5 million rows per hour. The Airflow tasks turned into zombies because Pandas consumed too much memory. I tried splitting the data into chunks of 10k rows, but that made the process slower, and sometimes tasks got stuck because the previous tasks hadn’t finished.

At that point, I really wanted to refactor the entire pipeline. That’s when I discovered Polars, which claimed to be faster than Pandas since it’s built with Rust.

I tested Polars in my playground, and the results showed that processing was indeed much faster than Pandas. Polars also used less memory, and the best part was its lazy evaluation feature, it doesn’t execute line by line, but instead optimizes and executes the whole plan only when the final result is needed.

Polars leverages Apache Arrow as its in-memory columnar data format, which makes processing more efficient and reduces memory overhead.

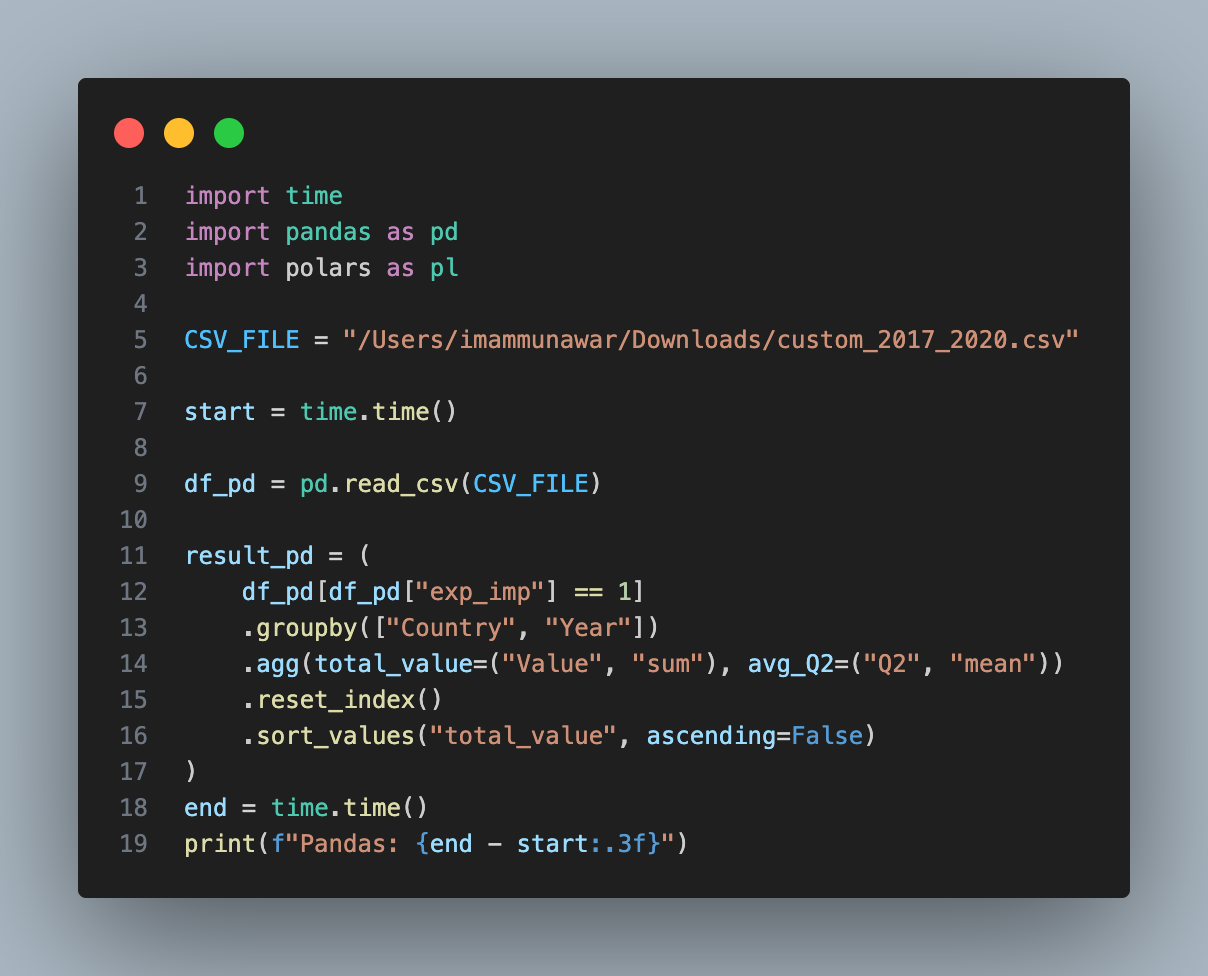

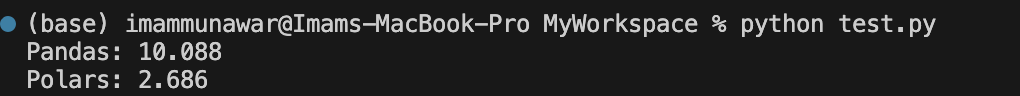

You can see image in the below, I'm trying to compare pandas and polars when read and doing simple transformation the csv file with size +900MB.

pandas take time: 10.08s, while polars just take 2.68s.

You can read the documentation here:

https://docs.pola.rs/api/python/stable/reference/dataframe/index.html